Drawing the Frame

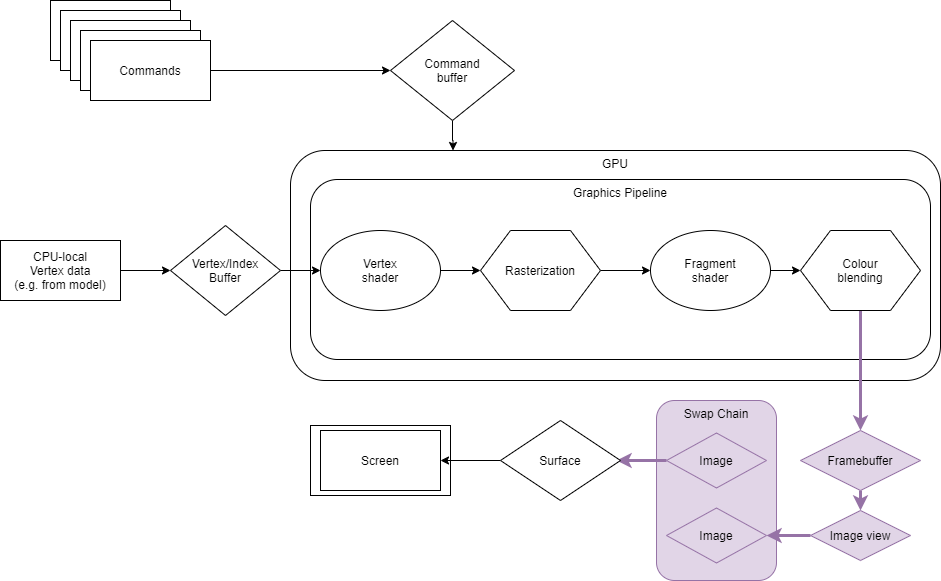

Once the command buffers have been recorded, they must be submitted to a queue every frame, and the rendered images presented to the screen.

This section will demonstrate how the example application finally produces some visible output on the screen. As with recording the command buffers, this stage of the application is relatively straightforward thanks to the setup work done during the initialisation stages.

One of the most important elements of drawing the frame is ensuring proper synchronisation. The previously created semaphores and fences are put to use here to ensure that images are acquired, rendered, and presented to the screen in the correct order.

Many of the commands used below have some combination of a wait semaphore field, a signal semaphore field, and a signal fence field.

The wait semaphore field instructs the command to wait for that semaphore to be signalled before beginning execution.

The signal semaphore and signal fence fields instruct the command to signal that semaphore or fence after it has finished executing.
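The signal-then-wait contract can be illustrated on the CPU alone. The following is a minimal analogy, not a model of how a driver implements fences: ToyFence and toySubmitAndWait() are hypothetical names that are not part of Vulkan or the example application. A worker thread stands in for the GPU and signals the fence only after its work is done, while the calling thread waits on the fence before reading the result. A Vulkan semaphore follows the same signal-then-wait pattern, except that in this application semaphores are waited on by queue operations rather than by the CPU.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>

// Hypothetical CPU-only stand-in for a fence (not a Vulkan type):
// one thread signals it after finishing its work, another waits on it.
class ToyFence {
public:
    // Called by the executing side once its work is complete.
    void signal() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            signalled_ = true;
        }
        cv_.notify_all();
    }

    // Blocks the caller until signal() has been called.
    void wait() {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return signalled_; });
    }

    // Returns the fence to the unsignalled state so it can be reused.
    void reset() {
        std::lock_guard<std::mutex> lock(mutex_);
        signalled_ = false;
    }

    bool isSignalled() {
        std::lock_guard<std::mutex> lock(mutex_);
        return signalled_;
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    bool signalled_ = false;
};

// Hypothetical helper: a worker thread plays the role of the GPU. It does
// its "work" and only then signals the fence; the caller waits on the
// fence before reading the result.
int toySubmitAndWait(ToyFence& fence) {
    int result = 0;
    std::thread worker([&] {
        result = 42;     // the work being executed
        fence.signal();  // signal only after the work has finished
    });
    fence.wait();        // wait before using the result
    worker.join();
    assert(fence.isSignalled());
    return result;
}
```

Calling toySubmitAndWait() on a fresh ToyFence returns 42 and leaves the fence signalled; reset() must then be called before the fence is reused, mirroring the vk::ResetFences() call in drawFrame().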

In the example code, drawFrame() is called in a loop to update the triangle on the screen as it rotates.

The following is a high-level overview of the drawFrame() function:

1. Wait for the fence to be signalled before rendering.

   This ensures that the command buffer submitted the last time this frame's fence was used has finished executing before the application starts reusing resources that the GPU might still be accessing. It also throttles the CPU side of the application, because the CPU cannot get further ahead of the GPU than the number of per-frame fences allows.

2. Acquire the next image in the swapchain.

   Because the timeout passed to vkAcquireNextImageKHR() is the maximum uint64_t value, the call blocks until an image has been acquired or an error is returned. The call also takes a signal semaphore, which is signalled once the presentation engine has finished with the acquired image. Waiting on this semaphore ensures that the GPU does not start rendering to the image before it is actually available.

3. Rotate the triangle.

   The vertices of the triangle are defined in three dimensions, but this is mostly for illustrative purposes, as the triangle is just a 2D shape shown on a 2D surface. A transformation matrix is applied to the triangle's vertices to rotate it. This matrix is the product of a rotation matrix and a projection matrix.

   Before each frame is rendered, a new rotation matrix is calculated from an angle value that is incremented every frame.

   The projection matrix only needs to be calculated once, during initialisation, because the transformation it defines is fixed.

   The new transformation matrix is written into a dynamic uniform buffer, where the vertex shader can access it.

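4. Submit the command buffer to the graphics queue.

   This begins the rendering process.

   At this point, the wait and signal semaphores are passed as parameters to the submit command. As mentioned above, these control the synchronisation of the GPU operations.

   The wait semaphore (acquireSemaphore[frameId] in the code) makes the submitted work wait until the swapchain image has been acquired. Because the wait stage mask is VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT, only the colour attachment output stage waits on it; earlier stages, such as vertex shading, can begin before the image is available.

   The rendering semaphore (presentSemaphores[frameId] in the code) is signalled when this command buffer finishes executing, and is waited on by the presentation stage.

   A fence (frameFences[frameId]) is also supplied when the command buffer is submitted. It is signalled when the command buffer has finished executing, and step 1 waits on it the next time drawFrame() uses the same frameId.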

5. Present the final rendered image to the screen.

   Presenting the image queues it for display and returns ownership of it to the swapchain's presentation engine.

   The order in which images are presented to the surface is determined by the swapchain's presentation mode, which was set during swapchain creation. The preferred presentation mode in this application is VK_PRESENT_MODE_FIFO_KHR, because it is the only mode guaranteed to be supported. In a triple-buffering scenario, this mode allows maximum performance without screen tearing, while still allowing the GPU to save power by never presenting more images than the display can physically show.

   The rendering semaphore, which was the signal semaphore of the previous command, is now passed as the wait semaphore of this command. This ensures that rendering has completed before the image is presented.

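6. Update the frameId.

   frameId selects which set of per-frame synchronisation objects (the acquire semaphore, the rendering semaphore, and the frame fence) is used in the current iteration of the loop. The swapchain image, command buffer, and uniform buffer slice are instead selected by currentBuffer, the image index returned by vkAcquireNextImageKHR().

   frameId is incremented at the end of each call to drawFrame(), and wraps back to zero when it reaches the number of images in the swapchain.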
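The frame index arithmetic can be checked in isolation. The helpers below, nextFrameId() and frameIdSequence(), are hypothetical and simply reproduce the modulo update at the end of drawFrame(); the three-image swapchain used in the usage note is an assumption, since the real image count is decided when the swapchain is created.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper mirroring the update at the end of drawFrame():
// advance to the next set of per-frame synchronisation objects,
// wrapping back to zero after the last one.
std::uint32_t nextFrameId(std::uint32_t frameId, std::uint32_t swapchainImageCount) {
    assert(swapchainImageCount > 0);
    return (frameId + 1) % swapchainImageCount;
}

// Returns the frameId used by each of the first `frames` iterations of a
// drawFrame()-style loop, starting from frameId 0.
std::vector<std::uint32_t> frameIdSequence(std::uint32_t frames, std::uint32_t swapchainImageCount) {
    std::vector<std::uint32_t> ids;
    std::uint32_t frameId = 0;
    for (std::uint32_t i = 0; i < frames; ++i) {
        ids.push_back(frameId);  // selects frameFences[frameId], acquireSemaphore[frameId], ...
        frameId = nextFrameId(frameId, swapchainImageCount);
    }
    return ids;
}
```

With three images, the first seven frames use frameId values 0, 1, 2, 0, 1, 2, 0, so the fence waited on at the start of the fourth frame is the one submitted with the first frame. This is why the fence wait limits how far the CPU can run ahead of the GPU.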

The complete drawFrame() function and the applyRotation() helper it calls are shown below.

```cpp
void VulkanHelloAPI::drawFrame()
{
    // Wait for the fence to be signalled before starting to render the current frame, then reset it so it can be reused.
    debugAssertFunctionResult(vk::WaitForFences(appManager.device, 1, &appManager.frameFences[frameId], true, FENCE_TIMEOUT), "Fence - Signaled");

    vk::ResetFences(appManager.device, 1, &appManager.frameFences[frameId]);

    // currentBuffer will be used to point to the correct frame/command buffer/uniform buffer data.
    // It is going to be the general index of the data being worked on.
    uint32_t currentBuffer = 0;
    VkPipelineStageFlags pipe_stage_flags = VK_PIPELINE_STAGE_COLOR_ATTACHMENT_OUTPUT_BIT;

    // Acquire and get the index of the next available swapchain image.
    debugAssertFunctionResult(
        vk::AcquireNextImageKHR(appManager.device, appManager.swapchain, std::numeric_limits<uint64_t>::max(), appManager.acquireSemaphore[frameId], VK_NULL_HANDLE, &currentBuffer),
        "Draw - Acquire Image");

    // Use a helper function with the current image index to calculate the transformation matrix and write it into the correct
    // slice of the uniform buffer.
    applyRotation(currentBuffer);

    // Submit the command buffer to the graphics queue which was created earlier to start rendering.
    // Notice the wait (acquire) and signal (present) semaphores, and the fence.
    VkSubmitInfo submitInfo = {};
    submitInfo.sType = VK_STRUCTURE_TYPE_SUBMIT_INFO;
    submitInfo.pNext = nullptr;
    submitInfo.pWaitDstStageMask = &pipe_stage_flags;
    submitInfo.waitSemaphoreCount = 1;
    submitInfo.pWaitSemaphores = &appManager.acquireSemaphore[frameId];
    submitInfo.signalSemaphoreCount = 1;
    submitInfo.pSignalSemaphores = &appManager.presentSemaphores[frameId];
    submitInfo.commandBufferCount = 1;
    submitInfo.pCommandBuffers = &appManager.cmdBuffers[currentBuffer];

    debugAssertFunctionResult(vk::QueueSubmit(appManager.graphicQueue, 1, &submitInfo, appManager.frameFences[frameId]), "Draw - Submit to Graphic Queue");

    // Queue the rendered image for presentation to the surface.
    // currentBuffer is again used to select the correct swapchain image to present. A wait
    // semaphore is also set here; it is signalled when the command buffer has finished execution.
    VkPresentInfoKHR presentInfo = {};
    presentInfo.sType = VK_STRUCTURE_TYPE_PRESENT_INFO_KHR;
    presentInfo.pNext = nullptr;
    presentInfo.swapchainCount = 1;
    presentInfo.pSwapchains = &appManager.swapchain;
    presentInfo.pImageIndices = &currentBuffer;
    presentInfo.pWaitSemaphores = &appManager.presentSemaphores[frameId];
    presentInfo.waitSemaphoreCount = 1;
    presentInfo.pResults = nullptr;

    debugAssertFunctionResult(vk::QueuePresentKHR(appManager.presentQueue, &presentInfo), "Draw - Submit to Present Queue");

    // Advance frameId to the next set of synchronisation objects, wrapping at the swapchain image count.
    frameId = (frameId + 1) % appManager.swapChainImages.size();
}

void VulkanHelloAPI::applyRotation(int idx)
{
    // Calculate the offset of this frame's slice in the dynamic uniform buffer.
    VkDeviceSize offset = (appManager.offset * idx);

    // Update the angle of rotation and calculate the transformation matrix using the fixed projection
    // matrix and a freshly-calculated rotation matrix.
    appManager.angle += 0.02f;
    auto rotation = std::array<std::array<float, 4>, 4>();
    rotateAroundZ(appManager.angle, rotation);
    auto mvp = std::array<std::array<float, 4>, 4>();
    multiplyMatrices(rotation, viewProj, mvp);

    // Copy the matrix into this frame's slice of the persistently mapped memory.
    memcpy(static_cast<unsigned char*>(appManager.dynamicUniformBufferData.mappedData) + appManager.dynamicUniformBufferData.bufferInfo.range * idx, &mvp, sizeof(mvp));

    // Describe the range that was just written, in case it needs to be flushed.
    VkMappedMemoryRange mapMemRange = {
        VK_STRUCTURE_TYPE_MAPPED_MEMORY_RANGE,
        nullptr,
        appManager.dynamicUniformBufferData.memory,
        offset,
        appManager.dynamicUniformBufferData.bufferInfo.range,
    };

    // ONLY flush the memory if it does not support VK_MEMORY_PROPERTY_HOST_COHERENT_BIT.
    if ((appManager.dynamicUniformBufferData.memPropFlags & VK_MEMORY_PROPERTY_HOST_COHERENT_BIT) == 0)
    {
        vk::FlushMappedMemoryRanges(appManager.device, 1, &mapMemRange);
    }
}
```
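The listing calls rotateAroundZ() and multiplyMatrices(), whose bodies are not shown in this section. The sketch below is one possible implementation of the same two operations, assuming row-major std::array storage (m[row][column]) and column vectors; rotationZ(), multiply(), and transform() are hypothetical names, and the SDK's own helpers may use a different memory layout or multiplication order (GLSL, for instance, reads matrices in column-major order by default).

```cpp
#include <array>
#include <cassert>
#include <cmath>

// 4x4 matrix stored as m[row][column]; vectors are treated as columns.
using Mat4 = std::array<std::array<float, 4>, 4>;
using Vec4 = std::array<float, 4>;

// Hypothetical helper: rotation of `angle` radians about the Z axis.
Mat4 rotationZ(float angle) {
    assert(std::isfinite(angle));
    const float c = std::cos(angle);
    const float s = std::sin(angle);
    return Mat4{{
        {{c, -s, 0.0f, 0.0f}},
        {{s, c, 0.0f, 0.0f}},
        {{0.0f, 0.0f, 1.0f, 0.0f}},
        {{0.0f, 0.0f, 0.0f, 1.0f}},
    }};
}

// Hypothetical helper: standard matrix product, result = a * b.
Mat4 multiply(const Mat4& a, const Mat4& b) {
    Mat4 result{};  // zero-initialised
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            for (int k = 0; k < 4; ++k)
                result[row][col] += a[row][k] * b[k][col];
    return result;
}

// Applies m to a column vector v, as a vertex shader computing m * v would.
Vec4 transform(const Mat4& m, const Vec4& v) {
    Vec4 out{};
    for (int row = 0; row < 4; ++row)
        for (int k = 0; k < 4; ++k)
            out[row] += m[row][k] * v[k];
    return out;
}
```

Rotating the point (1, 0, 0, 1) by pi/2 gives approximately (0, 1, 0, 1), and multiply(rotationZ(a), rotationZ(b)) matches rotationZ(a + b) to within floating-point error, which is why incrementing a single angle every frame produces a steady rotation.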

The helper method applyRotation() is called every frame to update the dynamic uniform buffer with the new transformation matrix. An offset selects the slice of the buffer that corresponds to the current frame; the slice is chosen by the idx parameter, which drawFrame() sets to currentBuffer, the index of the acquired swapchain image.
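Each slice must start at an offset that is a multiple of VkPhysicalDeviceLimits::minUniformBufferOffsetAlignment, because Vulkan requires dynamic uniform buffer offsets to be aligned to that limit. The sketch below shows the arithmetic on a plain byte vector standing in for the mapped memory; alignUp(), sliceOffset(), and writeMatrixToSlice() are hypothetical names, and the 256-byte alignment in the usage note is an illustrative value, not one taken from the example application.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

using Mat4 = std::array<std::array<float, 4>, 4>;

// Rounds size up to the next multiple of alignment, which must be a power
// of two (Vulkan guarantees this for minUniformBufferOffsetAlignment).
std::size_t alignUp(std::size_t size, std::size_t alignment) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    return (size + alignment - 1) & ~(alignment - 1);
}

// Byte offset of the slice belonging to swapchain image `idx`.
std::size_t sliceOffset(std::size_t idx, std::size_t minUboAlignment) {
    return alignUp(sizeof(Mat4), minUboAlignment) * idx;
}

// Copies the matrix into its slice of a buffer that stands in for the
// persistently mapped uniform memory.
void writeMatrixToSlice(std::vector<unsigned char>& mapped, std::size_t idx,
                        std::size_t minUboAlignment, const Mat4& m) {
    const std::size_t offset = sliceOffset(idx, minUboAlignment);
    assert(offset + sizeof(m) <= mapped.size());
    std::memcpy(mapped.data() + offset, &m, sizeof(m));
}
```

With a 256-byte alignment, a 64-byte matrix still occupies a 256-byte slice, so the matrix for image 2 starts at byte 512.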

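This memory is mapped persistently, so there is no need to map it again every frame. The pointer to the persistently mapped memory is stored in appManager.dynamicUniformBufferData.mappedData. If the memory type is not host-coherent, the written range is flushed with vk::FlushMappedMemoryRanges() so that the update becomes visible to the device.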
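When the memory is not host-coherent, applyRotation() flushes the range it wrote. Vulkan requires the offset of a VkMappedMemoryRange to be a multiple of VkPhysicalDeviceLimits::nonCoherentAtomSize (a power of two), and its size to be VK_WHOLE_SIZE, a multiple of that value, or exactly enough to reach the end of the memory allocation. The sketch below widens a written range to satisfy those rules, assuming the whole allocation is mapped; FlushRange and alignFlushRange() are hypothetical names, and the atom and allocation sizes in the usage note are arbitrary example values.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical plain struct mirroring the offset/size pair of a VkMappedMemoryRange.
struct FlushRange {
    std::uint64_t offset;
    std::uint64_t size;
};

// Widens [offset, offset + size) to satisfy the flush alignment rules:
// the start is rounded down to a multiple of atomSize, and the end is rounded
// up to a multiple of atomSize but clamped to the end of the allocation.
FlushRange alignFlushRange(std::uint64_t offset, std::uint64_t size,
                           std::uint64_t atomSize, std::uint64_t allocationSize) {
    assert(atomSize != 0 && (atomSize & (atomSize - 1)) == 0);
    assert(size > 0 && offset + size <= allocationSize);
    const std::uint64_t start = offset & ~(atomSize - 1);
    std::uint64_t end = (offset + size + atomSize - 1) & ~(atomSize - 1);
    if (end > allocationSize) {
        end = allocationSize;  // reaching the end of the allocation is also valid
    }
    return FlushRange{start, end - start};
}
```

With a 64-byte atom size, a 10-byte write at offset 100 widens to the range starting at 64 with size 64, while a range whose offset and size are already multiples of the atom size is returned unchanged.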