Vulkan Concepts

This section outlines the various Vulkan concepts and terms used throughout this tutorial, while also serving as a flowchart to help developers understand the steps taken to go from code to on-screen render.

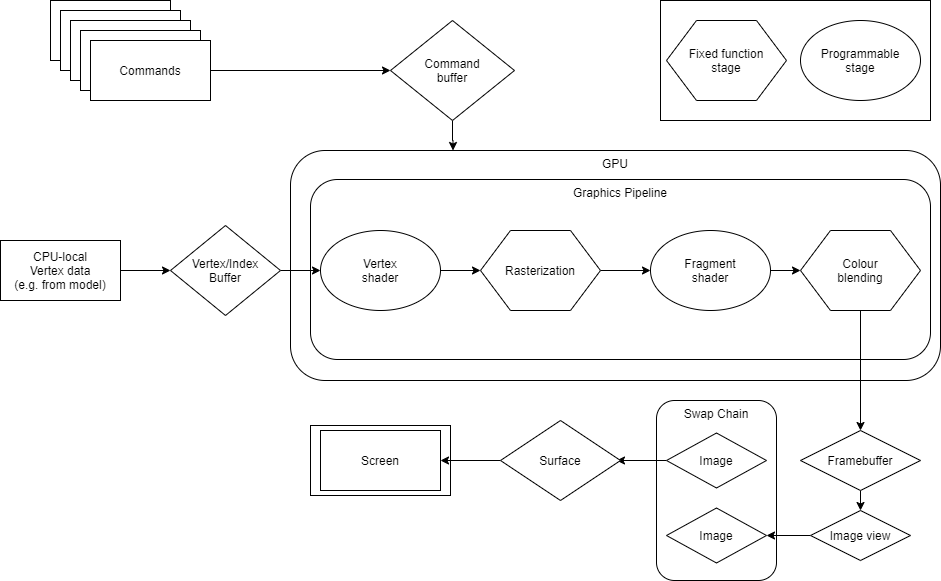

The diagram above shows the logical datapath of each frame being drawn in the Vulkan system. This diagram will be referred to during each stage of the rendering process. The individual sections are outlined below.

However, before any of these can be converted to code, a lot of initialisation needs to happen.

Images and Views

Vulkan has a concept of images and image views. An image view describes how to access the image and which parts of the image to access. This has specific applications depending on what the image is being used for.

As an example, an image that is treated as a 2D texture with mipmap levels will have a different image view to the final scene being rendered. Note that images and buffers are not interchangeable; while an image is technically a buffer in the sense of being an accessible block of raw memory, it has a specific use case that cannot be changed once the image is created. The image view acts as an intermediary between the image and the framebuffer, and its main usage comes in the sampling and binding of textures.
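To make "which parts of the image to access" more concrete, the sketch below models a view as a range of mip levels and array layers and checks that the range lies inside the image. The types `ImageDesc` and `ViewRange` and the function `viewFitsImage` are hypothetical stand-ins written for this illustration; `ViewRange` loosely mirrors the fields of `VkImageSubresourceRange`, but none of this is Vulkan API code.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the image properties that matter here.
struct ImageDesc {
    std::uint32_t mipLevels;    // number of mipmap levels in the image
    std::uint32_t arrayLayers;  // number of array layers in the image
};

// Hypothetical stand-in loosely mirroring VkImageSubresourceRange
// (the aspect mask is left out for brevity).
struct ViewRange {
    std::uint32_t baseMipLevel;
    std::uint32_t levelCount;
    std::uint32_t baseArrayLayer;
    std::uint32_t layerCount;
};

// Returns true when the view only refers to mip levels and array layers
// that exist in the image.
bool viewFitsImage(const ImageDesc& image, const ViewRange& view) {
    // Every image has at least one mip level and one array layer.
    assert(image.mipLevels > 0 && image.arrayLayers > 0);

    if (view.levelCount == 0 || view.layerCount == 0) return false;
    if (view.baseMipLevel >= image.mipLevels) return false;
    if (view.levelCount > image.mipLevels - view.baseMipLevel) return false;
    if (view.baseArrayLayer >= image.arrayLayers) return false;
    if (view.layerCount > image.arrayLayers - view.baseArrayLayer) return false;
    return true;
}
```

Under this model, a mipmapped texture with ten levels could be viewed in full with `ViewRange{0, 10, 0, 1}`, while a render target would typically use a single level and layer.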

The Swapchain

Like many other graphics APIs, Vulkan uses framebuffers (effectively blocks of memory from where the screen reads the final image) to present data to the screen. As is common in graphics APIs, Vulkan uses multiple buffers so that while one buffer is being rendered to, another can be presented to the display. Vulkan handles this using an object called the swapchain.

Unlike OpenGL, which abstracts multiple buffer rendering behind a single default framebuffer representing the entire swapchain, in Vulkan, each image is represented as its own framebuffer in the code. The swapchain is composed of multiple images that are treated as buffers that the application renders to and presents from.

The swapchain is a part of the application, but can be thought of as a way for the application to get access to images for it to modify and render into, while also allowing the presentation engine to access the presentable images that have already been rendered.
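Because the swapchain holds several images, one of the first decisions an application makes is how many to request. The sketch below shows one common convention (an assumption here, not something this tutorial prescribes): ask for one image more than the minimum the surface supports, clamped to the maximum. The parameter names follow the `minImageCount` and `maxImageCount` fields of `VkSurfaceCapabilitiesKHR`, where a maximum of 0 means there is no upper limit; the function name `chooseSwapchainImageCount` is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Picks a swapchain image count from the surface limits.
// maxImageCount == 0 means the surface imposes no upper limit.
std::uint32_t chooseSwapchainImageCount(std::uint32_t minImageCount,
                                        std::uint32_t maxImageCount) {
    assert(maxImageCount == 0 || minImageCount <= maxImageCount);

    // One extra image so the application is less likely to wait on the
    // presentation engine before it can start the next frame.
    std::uint32_t desired = minImageCount + 1;

    // Respect the upper limit when there is one.
    if (maxImageCount != 0) {
        desired = std::min(desired, maxImageCount);
    }
    return desired;
}
```

In a real application the two limits would come from querying the surface capabilities of the physical device, and the result would be passed to the swapchain at creation.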

Surfaces

A surface is an application-level abstraction of the actual native window; that is, the actual OS-specific part of the screen that is being rendered into, such as the window in a windowing system, or the entire screen for an application running in fullscreen mode. It is the presentation target for images in the swapchain, which act as handles for the surface. Different platforms require their surfaces to be created and initialised in different ways.

Pipelines

A graphics pipeline comprises the stages that operate on the buffers to write data to a swapchain image. It is made up of multiple stages of two types: fixed function and programmable.

In order to process data and render to the swapchain images, most applications go through the graphics pipeline. Below is a simplified outline of an example graphics pipeline.

Vertex Input.

Vertex Shader.

Rasterization.

Fragment Shader.

Colour Blending.

Framebuffer output.

In Vulkan, there is an additional pipeline concept called a VkPipeline. A VkPipeline is an object whose job is to configure the GPU for the type of work being done. There are two flavours of a VkPipeline: graphics and compute.

Note that a VkPipeline and a graphics pipeline are distinct concepts. From this point onwards, when referring to a VkPipeline object, we will use the capitalised term Pipeline. For example: “A graphics Pipeline is the configuration of the graphics pipeline”.

The Vulkan Queues

GPUs are asynchronous devices designed for highly parallel workloads commonly found in graphics. The hardware is optimised for functions relevant to the mathematics involved in graphics and rendering. Vulkan works on a system of commands that the application submits to the GPU. These commands can vary in their nature, from setting up memory, to compute calculations, and more.

Commands are stored within command buffers and submitted to a queue, where the driver sends them on to the GPU to perform work. The GPU will execute these commands in whatever order is most efficient, meaning there are few guarantees about the order in which the tasks will complete. While this does allow for significant performance optimisations, it also requires the developer to do more work in the form of synchronisation (which other APIs may handle automatically). We will elaborate on this later.

The queue families available to an application depend on the physical device on which the application is running. There are a number of different queue families, but in the case of this simple application, only the graphics queue needs to be used. However, it is good to be aware of others.
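Selecting the graphics queue family usually comes down to scanning the families reported by the physical device and picking the first one that advertises graphics support. The sketch below shows that scan on stand-in data. `QueueFamily`, `kNoQueueFamily`, and `findGraphicsQueueFamily` are hypothetical names for this illustration; the struct mirrors the `queueFlags` and `queueCount` fields of `VkQueueFamilyProperties`, and the bit constants use the same values as `VK_QUEUE_GRAPHICS_BIT`, `VK_QUEUE_COMPUTE_BIT`, and `VK_QUEUE_TRANSFER_BIT`.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Same numeric values as the corresponding VkQueueFlagBits entries.
constexpr std::uint32_t kGraphicsBit = 0x1;  // VK_QUEUE_GRAPHICS_BIT
constexpr std::uint32_t kComputeBit  = 0x2;  // VK_QUEUE_COMPUTE_BIT
constexpr std::uint32_t kTransferBit = 0x4;  // VK_QUEUE_TRANSFER_BIT

// Sentinel returned when no suitable family exists (hypothetical).
constexpr std::uint32_t kNoQueueFamily = 0xFFFFFFFFu;

// Hypothetical stand-in for the relevant fields of VkQueueFamilyProperties.
struct QueueFamily {
    std::uint32_t queueFlags;  // capabilities of the queues in this family
    std::uint32_t queueCount;  // number of queues the family exposes
};

// Returns the index of the first family that supports graphics work,
// or kNoQueueFamily if there is none.
std::uint32_t findGraphicsQueueFamily(const std::vector<QueueFamily>& families) {
    for (std::uint32_t i = 0; i < families.size(); ++i) {
        const bool hasQueues = families[i].queueCount > 0;
        const bool hasGraphics = (families[i].queueFlags & kGraphicsBit) != 0;
        if (hasQueues && hasGraphics) {
            return i;
        }
    }
    return kNoQueueFamily;
}
```

With the real API, the list of families would come from `vkGetPhysicalDeviceQueueFamilyProperties`, and an application that presents to a surface would also need to confirm that the chosen family supports presentation.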

Command Buffers

There is a translation from Vulkan API calls to the work that gets sent to the GPU. The application must record commands to a buffer before they are queued up for execution.

The command buffer needs to have its state specified by the Pipeline configuration before you run any commands that would be affected. It is possible to bind different Pipeline objects in between, but this is the developer’s responsibility.

Command buffers are allocated from a memory pool called a command pool. Command pools are linked to a specific queue family, and so a command buffer allocated from a specific command pool can only be submitted to queues from that queue family.

Render Pass

A render pass refers to a “chunk of work” needed to render data into a specific framebuffer from start to finish. In a practical sense, it is everything recorded in a command buffer between the vkCmdBeginRenderPass and vkCmdEndRenderPass calls. As can be seen in the code, there is a lot that needs to be configured within that pass, such as loading and storing data.

There is a separate VkRenderPass object which contains the information, or blueprint, for a Framebuffer object. In this sense, one VkRenderPass can be used to create multiple Framebuffers. The importance of this is that compatible VkPipeline objects can be used with these render passes to render into any valid Framebuffer. The Pipeline requires information about which render pass object it is compatible with on creation. This is so that each stage in the pipeline is aware of what data the framebuffer requires and what already exists.

Attachments are part of the Framebuffer, but their descriptors are relevant for the render pass. Attachments include Color, Depth, Stencil, Input, and Resolve. These values from the framebuffer pixels are written into the relevant attachments. These same images can be bound as Input attachments and accessed as input values by other render passes and subpasses.

Synchronisation

Because Vulkan is a low-level API, synchronisation is left almost entirely up to the developer. While this helps boost performance, it can be daunting for those who do not have much experience in managing their application’s synchronisation at this granularity. The main reason Vulkan does not guarantee much order in command buffer execution is due to the need to achieve as much parallelism as possible. Vulkan’s fine-grained synchronisation mechanisms can allow a well-designed application to achieve maximum performance from a GPU by considering its parallel architecture.

Generally, most Vulkan operations can overlap, but there are some order-sensitive actions; acquiring an image from the swapchain, rendering to that image, and then presenting that image back to the surface is something that needs to occur in that exact order.

Vulkan provides three types of synchronisation objects to the developer: fences, semaphores, and events. These will each be described in more detail in Creating the Synchronisation Objects.
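The acquire, render, present ordering described above is typically enforced with a pair of semaphores: one signalled when the swapchain image is available, and one signalled when rendering has finished. The sketch below is a CPU-side toy model of that dependency chain, not Vulkan API code. All names (`BinarySemaphore`, `FrameSync`, `acquireImage`, `renderToImage`, `presentImage`) are hypothetical, and where a real wait would hold back GPU work until the signal arrives, this model simply reports failure.

```cpp
#include <cassert>

// Toy stand-in for a binary semaphore: either signalled or not.
struct BinarySemaphore {
    bool signalled = false;
};

// The two semaphores commonly used to order the work for one frame.
struct FrameSync {
    BinarySemaphore imageAvailable;  // signalled by acquire, waited on by render
    BinarySemaphore renderFinished;  // signalled by render, waited on by present
};

// Acquire: signals imageAvailable once the image can be rendered to.
bool acquireImage(FrameSync& frame) {
    // A binary semaphore must be waited on before it is signalled again.
    assert(!frame.imageAvailable.signalled);
    frame.imageAvailable.signalled = true;
    return true;
}

// Render: waits on imageAvailable, then signals renderFinished.
bool renderToImage(FrameSync& frame) {
    if (!frame.imageAvailable.signalled) return false;  // nothing acquired yet
    frame.imageAvailable.signalled = false;             // the wait consumes the signal
    frame.renderFinished.signalled = true;
    return true;
}

// Present: waits on renderFinished before handing the image to the display.
bool presentImage(FrameSync& frame) {
    if (!frame.renderFinished.signalled) return false;  // rendering not complete
    frame.renderFinished.signalled = false;
    return true;
}
```

Calling the three functions in order succeeds, while presenting before rendering, or rendering before acquiring, is rejected. This is the same constraint the real semaphores impose on the GPU.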